Over the last decade, as AI has made rapid progress and found its way into more areas of life, people have begun raising questions about the ethical issues it creates.

While AI is seeing positive growth in fields such as healthcare and engineering, there is no denying that it comes with ethical implications as well.

Like any new technology, AI has its pros and cons, along with its fair share of embracers and skeptics.

Here are some of the biggest ethical issues in artificial intelligence.

AI Taking Over Jobs

Any new technology that supersedes an old one causes a hue and cry among the users or creators of the older technology. For example, when television arrived, people thought it was the end of radio. When ATMs were invented, bank employees feared losing their jobs.

However, radio did not die out, and bank employees did not lose their jobs. The new inventions simply brought something new and different to the picture.

Similarly, AI is not here to replace human jobs completely, but rather to assist with mundane, everyday tasks.

It is true to some extent that AI will take over some of the jobs that can be automated, for example, using chatbots as customer service agents. However, AI cannot fully replace the human experience. It cannot act as a customer service agent at the level a human can, and it won’t fully understand each person’s individual situation. It is therefore not a viable solution for every domain.

Our economic system is built on paying workers for the work they put in. If companies replace part of their workforce with AI to cut costs, many people can be left without jobs.

Remember that AI is there to assist with processes and make lives easier, not replace humans.

Privacy Issues

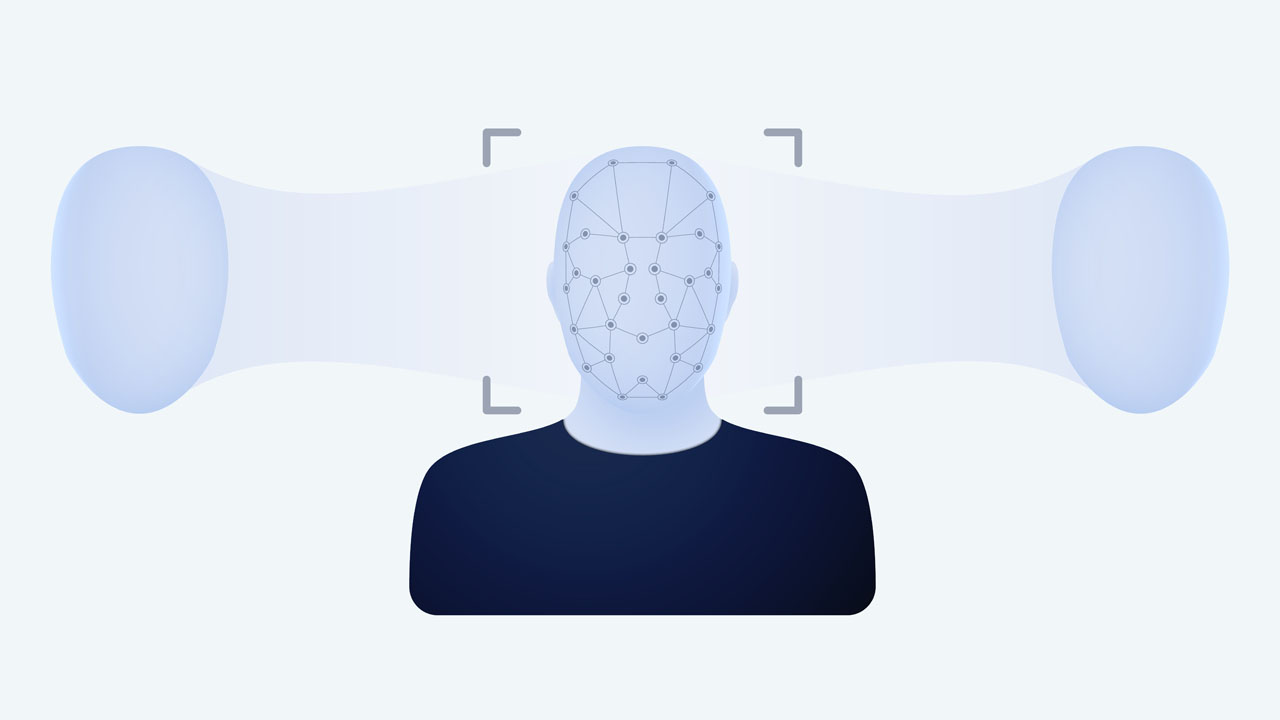

A lot of the ethical debate around AI stems from privacy concerns. Consider facial recognition: people fear that images of them can be stored, monitored, and used without their consent.

However, facial recognition technology also has its benefits. For example, it can help locate criminals or missing people by showing where they were last seen.

The healthcare industry is also using facial recognition to check patients in and out efficiently, reducing manual processes. It also allows hospitals to control access to specific areas of a facility.

The Aspect of Trickery

With the latest technologies, AI is becoming more sophisticated and compelling at modeling human behavior and interactions.

Eugene Goostman, a bot portrayed as a 13-year-old Ukrainian boy, has competed in a number of Turing tests, including an event held in London in 2014, where it convinced a third of the judges that they were talking to a real human being.

Similarly, at its I/O conference in 2018, Google demonstrated Google Assistant placing a phone call that sounded strikingly like a human. The assistant asked the right questions and paused in all the right places.

The person on the receiving end of the call could not tell whether the caller was an AI or a real human.

This presents an ethical dilemma: do Google and the creators of Eugene Goostman have an obligation to tell people that they are speaking to an AI?

Can such technology create mistrust and confusion among humans?

Experts have yet to fully explore and answer these questions.

AI biases

While AI is capable of processing large amounts of data beyond human capacity, it cannot always be trusted to present unbiased results.

After all, AI systems are built by humans, and the biases and prejudices of their creators, or of the data the systems are trained on, can be reflected in their results.

It is, therefore, important for creators to use diverse training data and regularly audit their models for biases.
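To make "audit their models for biases" more concrete, here is a minimal Python sketch of one common check. It assumes a model that makes yes/no decisions (for example, loan approvals) and that each record's demographic group is known. It compares how often each group receives a positive outcome and reports the ratio of the lowest rate to the highest. The function names and sample data are hypothetical, and the 0.8 cutoff borrows the "four-fifths rule" heuristic from US employment guidelines; a real audit would look at several fairness metrics, not just this one.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Return the fraction of positive predictions (1s) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact(groups, predictions):
    """Ratio of the lowest group selection rate to the highest (1.0 = parity)."""
    rates = selection_rates(groups, predictions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group receives positive outcomes, so there is no gap to measure
    return min(rates.values()) / highest


if __name__ == "__main__":
    # Hypothetical model outputs: 1 = approved, 0 = rejected.
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    predictions = [1, 1, 1, 0, 1, 0, 0, 0]
    print(selection_rates(groups, predictions))  # {'A': 0.75, 'B': 0.25}
    ratio = disparate_impact(groups, predictions)
    print(f"disparate impact ratio: {ratio:.2f}")  # disparate impact ratio: 0.33
    # Assumed threshold: the four-fifths (0.8) heuristic.
    if ratio < 0.8:
        print("possible bias: flag the model for review")
```

A ratio near 1.0 means the groups receive positive outcomes at similar rates. A low ratio is a signal to investigate the training data and the model, not proof of bias on its own.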

Weaponization

AI systems are not bound by borders. AI-powered weapons can cross difficult terrain and enter foreign territory, which makes the implications of their use highly dangerous.

AI can be used to develop autonomous weapons, built with sensors and algorithms, that engage targets without human intervention. It can also be used to identify and target potential criminals.

Because of the ethical concerns around AI weaponization, international debates and talks on the subject are ongoing.

The Ethics of Using Deepfakes Online

Deepfakes are AI-generated media in which a real person’s voice or face is used to impersonate that person or a public figure. A celebrity voice changer, for example, is software that lets users change their voice to sound like a celebrity’s.

In March 2019, a cybercriminal tricked the CEO of a UK-based energy company into transferring €220,000 to an overseas account.

The CEO was convinced he was speaking to his boss. The cybercriminal had used deepfake software to mimic the boss’s voice and trick the CEO into sending the money.

While deepfake technology clearly raises ethical concerns, it can benefit a large number of people when used properly.

Consider the example of Val Kilmer, the actor famous for Top Gun. In 2017, the actor lost his voice to throat cancer. The UK-based firm Sonantic used AI to recreate his voice, and Val Kilmer was ecstatic. “People around me struggle to understand when I’m talking. But despite all that I still feel I’m the exact same person. Still the same creative soul,” he said in an emotional YouTube clip.

If used ethically, like in Val Kilmer’s scenario, AI voice changers and online deepfake software can open doors to opportunities and growth.

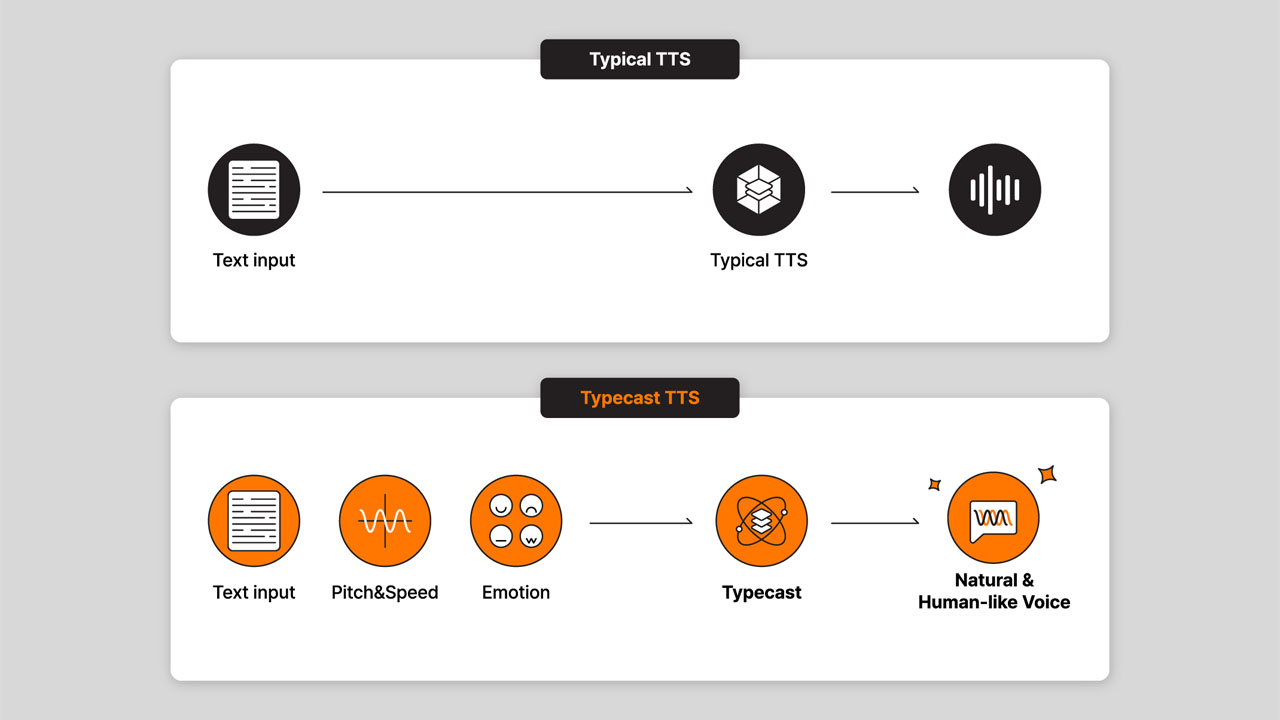

Realtime voice cloning

To produce realistic-sounding content, creators can use realtime voice cloning to replicate the voices of public figures and celebrities.

Creators simply need to record a clip and upload it to the AI software. The software replicates the desired voice, capturing nuances of speech such as pitch, pauses, and expression.

Realtime voice cloning software allows users to type in text that is converted into spoken audio or video. Talk Obama To Me is one example: it uses text to speech to stitch together several Barack Obama clips into realistic-sounding content featuring Obama.

Using AI ethically

Education and Awareness

Ethical issues in artificial intelligence are real. However, as with any other technology, it is important to understand both sides of the picture.

While AI’s ethical implications are debatable, organizations and individuals should do their due diligence before adopting AI technologies. Educating people on the potential benefits of AI can open room for constructive dialogue around the technology.

Organizations can create their own set of ethical guidelines around AI to promote safe practices.

Being transparent and secure

When dealing with customers and users, it is important to be transparent about the way their data will be used and how you, as a business, will benefit from that data. Building trust is important in cultivating healthy relationships and in creating a positive sentiment around your business.